
VALERIE22 - A photorealistic, richly metadata annotated dataset of urban environments

Dataset Summary

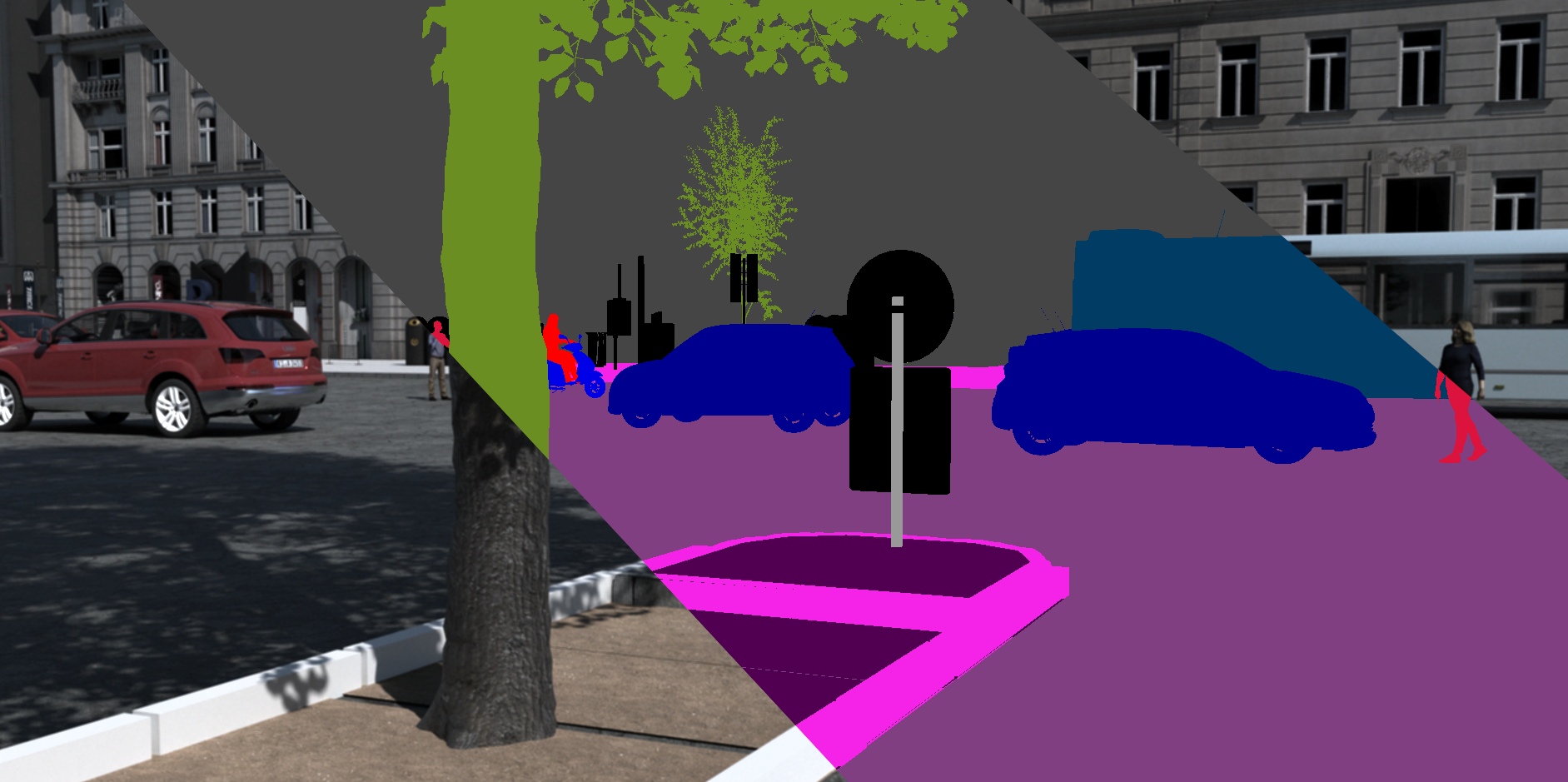

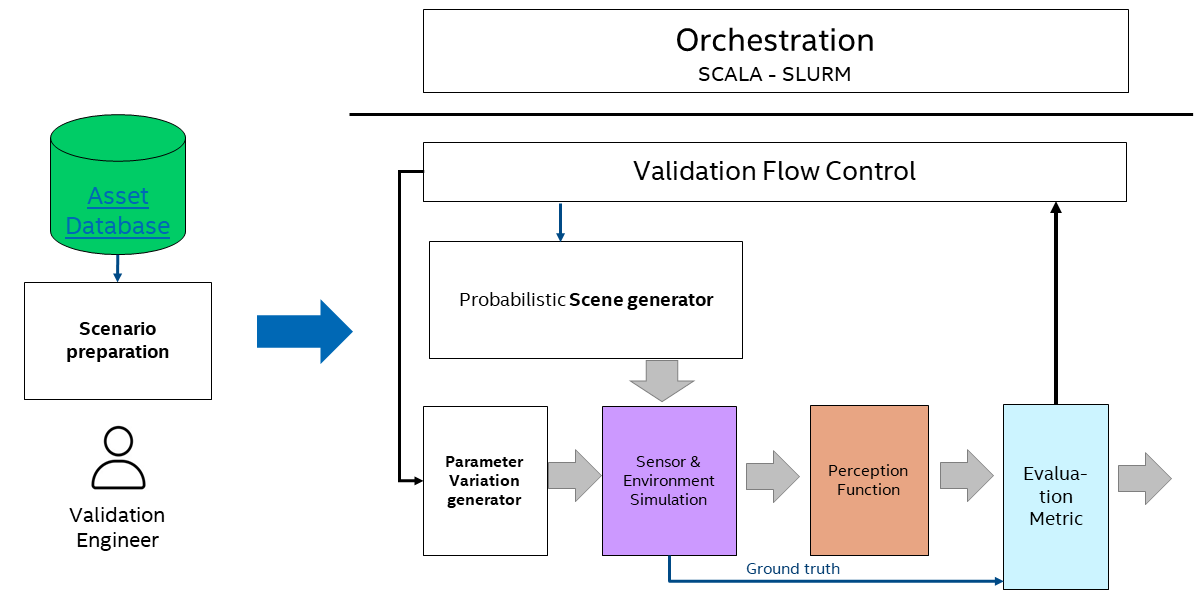

The VALERIE22 dataset was generated with the VALERIE procedural tools pipeline (see image below), which provides a photorealistic sensor simulation rendered from automatically synthesized scenes. The dataset provides a uniquely rich set of metadata, allowing extraction of specific scene and semantic features (such as pixel-accurate occlusion rates, positions in the scene, and distance and angle to the camera). This enables a multitude of possible tests on the data, and we hope it will stimulate research on understanding the performance of DNNs.

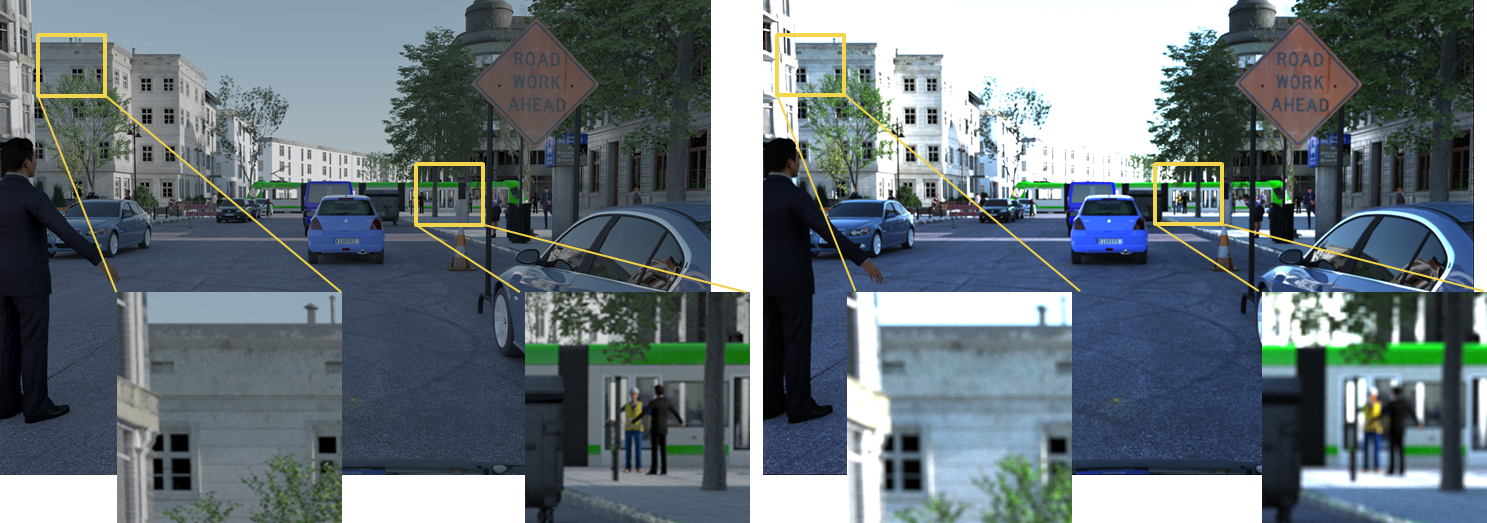
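As a rough illustration of how such metadata could be used to select test cases, the sketch below filters annotated objects by occlusion rate and camera distance. The record keys `occlusion` and `distance`, the thresholds, and the function name `filter_objects` are hypothetical and chosen only for this example; the actual field names in the ground-truth files may differ.

```python
def filter_objects(objects, max_occlusion=0.5, max_distance=50.0):
    """Keep objects that are at most partly occluded and close to the camera.

    `objects` is a list of dicts; the keys "occlusion" (0..1) and
    "distance" are hypothetical, not the dataset's confirmed schema.
    """
    return [
        obj
        for obj in objects
        if obj["occlusion"] <= max_occlusion and obj["distance"] <= max_distance
    ]


# Toy records using the hypothetical keys (not real dataset values)
objects = [
    {"id": 1, "occlusion": 0.10, "distance": 12.0},
    {"id": 2, "occlusion": 0.80, "distance": 9.0},
    {"id": 3, "occlusion": 0.20, "distance": 75.0},
]

visible_nearby = filter_objects(objects)
print([obj["id"] for obj in visible_nearby])  # prints [1]
```

The same pattern extends to other per-object attributes, for example the angle to the camera, once the real key names are known.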

Each sequence of the dataset contains two rendered images for each scene. One is rendered with the default Blender tonemapping (/png), whereas the second is rendered with our photorealistic sensor simulation (/png_distorted, see hagn2022optimized). The image below shows the difference between the two methods.

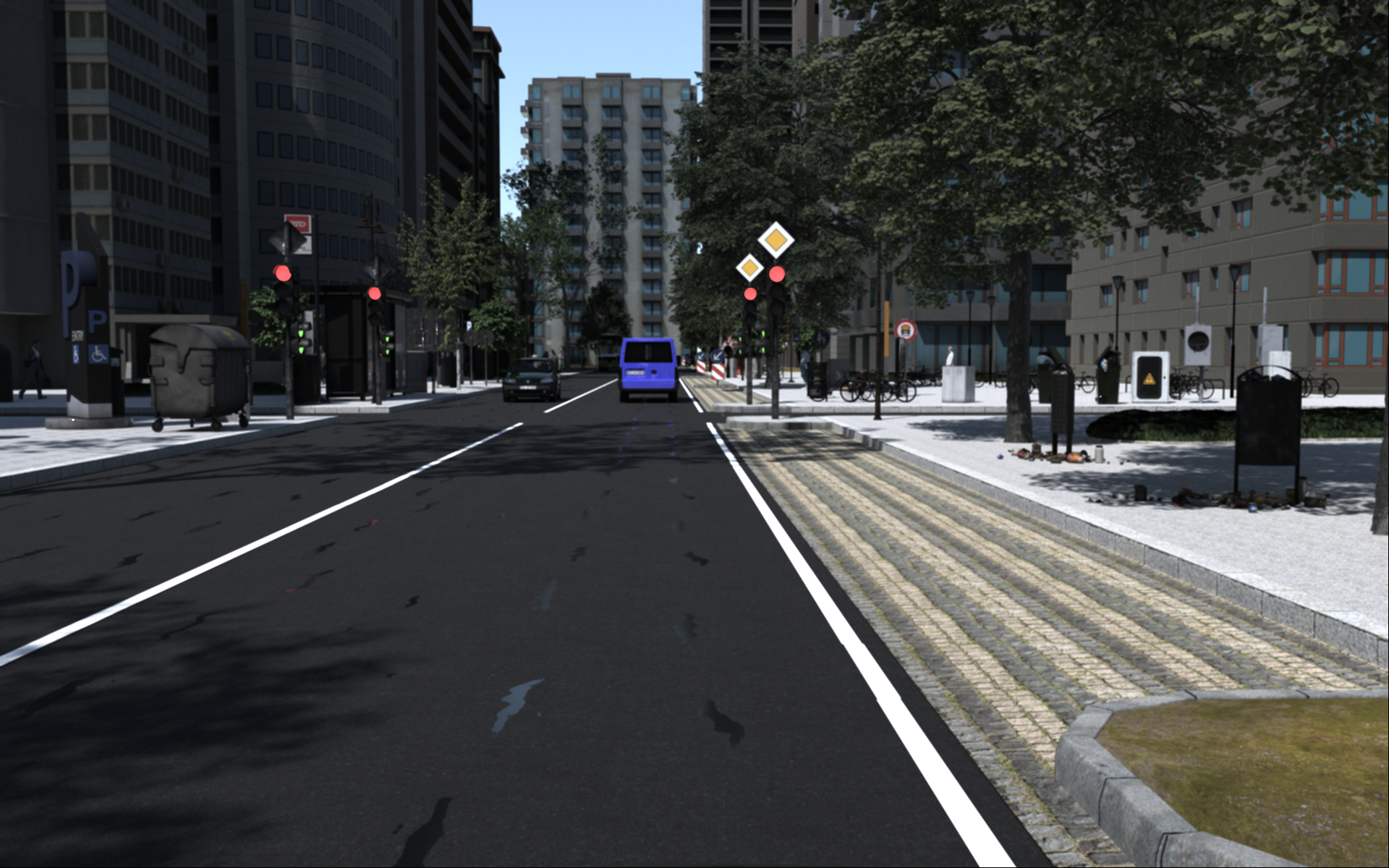

Following are some example images showing the unique characteristics of the different sequences.

| Sequence0052 | Sequence0054 | Sequence0057 | Sequence0058 |
|---|---|---|---|
| (image) | (image) | (image) | (image) |

| Sequence0059 | Sequence0060 | Sequence0062 |
|---|---|---|
| (image) | (image) | (image) |

Supported Tasks

- pedestrian detection

- 2d object-detection

- 3d object-detection

- semantic-segmentation

- instance-segmentation

- ai-validation

Dataset Structure

```
VALERIE22
├───intel_results_sequence_0050
│   ├───ground-truth
│   │   ├───2d-bounding-box_json
│   │   │   └───car-camera000-0000-{UUID}-0000.json
│   │   ├───3d-bounding-box_json
│   │   │   └───car-camera000-0000-{UUID}-0000.json
│   │   ├───class-id_png
│   │   │   └───car-camera000-0000-{UUID}-0000.png
│   │   ├───general-globally-per-frame-analysis_json
│   │   │   ├───car-camera000-0000-{UUID}-0000.json
│   │   │   └───car-camera000-0000-{UUID}-0000.csv
│   │   ├───semantic-group-segmentation_png
│   │   │   └───car-camera000-0000-{UUID}-0000.png
│   │   └───semantic-instance-segmentation_png
│   │       ├───car-camera000-0000-{UUID}-0000.png
│   │       └───car-camera000-0000-{UUID}-0000
│   │           └───{Entity-ID}
│   └───sensor
│       └───camera
│           └───left
│               ├───png
│               │   └───car-camera000-0000-{UUID}-0000.png
│               └───png_distorted
│                   └───car-camera000-0000-{UUID}-0000.png
├───intel_results_sequence_0052
├───intel_results_sequence_0054
├───intel_results_sequence_0057
├───intel_results_sequence_0058
├───intel_results_sequence_0059
├───intel_results_sequence_0060
└───intel_results_sequence_0062
```
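For working with a local copy of the files directly, the following minimal sketch walks one sequence folder and pairs each rendered image with its ground-truth files. It assumes that the ground-truth files share the file stem of the rendered image, which the naming pattern in the tree suggests but does not guarantee. The helper name `frame_files` and its `variant` parameter are illustrative and not part of the dataset tooling.

```python
from pathlib import Path

# Ground-truth folders from the directory tree, mapped to their file suffix.
GROUND_TRUTH_DIRS = {
    "2d-bounding-box_json": ".json",
    "3d-bounding-box_json": ".json",
    "class-id_png": ".png",
    "semantic-group-segmentation_png": ".png",
    "semantic-instance-segmentation_png": ".png",
}


def frame_files(sequence_dir, variant="png_distorted"):
    """Yield one dict per frame: the rendered image plus its ground-truth paths.

    Assumption: ground-truth files use the same file stem as the image.
    Missing files are reported as None instead of raising an error.
    """
    sequence_dir = Path(sequence_dir)
    image_dir = sequence_dir / "sensor" / "camera" / "left" / variant
    for image_path in sorted(image_dir.glob("*.png")):
        record = {"image": image_path}
        for folder, suffix in GROUND_TRUTH_DIRS.items():
            candidate = (
                sequence_dir / "ground-truth" / folder / (image_path.stem + suffix)
            )
            record[folder] = candidate if candidate.exists() else None
        yield record
```

For example, `list(frame_files("VALERIE22/intel_results_sequence_0050", variant="png"))` would return the frames rendered with the default Blender tonemapping, provided the dataset has been downloaded to that (assumed) local path.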

Data Splits

13476 images for training:

```python
from datasets import load_dataset

dataset = load_dataset("Intel/VALERIE22", split="train")
```

8406 images for validation and test:

```python
dataset = load_dataset("Intel/VALERIE22", split="validation")
dataset = load_dataset("Intel/VALERIE22", split="test")
```

Licensing Information

CC BY 4.0

Grant Information

Generated within the project KI-Absicherung with funding from the German Federal Ministry of Industry and Energy under grant number 19A19005M.

Citation Information

Relevant publications:

```bibtex
@misc{grau2023valerie22,
  title={VALERIE22 -- A photorealistic, richly metadata annotated dataset of urban environments},
  author={Oliver Grau and Korbinian Hagn},
  year={2023},
  eprint={2308.09632},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

@inproceedings{hagn2022increasing,
  title={Increasing pedestrian detection performance through weighting of detection impairing factors},
  author={Hagn, Korbinian and Grau, Oliver},
  booktitle={Proceedings of the 6th ACM Computer Science in Cars Symposium},
  pages={1--10},
  year={2022}
}

@inproceedings{hagn2022validation,
  title={Validation of Pedestrian Detectors by Classification of Visual Detection Impairing Factors},
  author={Hagn, Korbinian and Grau, Oliver},
  booktitle={European Conference on Computer Vision},
  pages={476--491},
  year={2022},
  organization={Springer}
}

@incollection{grau2022variational,
  title={A variational deep synthesis approach for perception validation},
  author={Grau, Oliver and Hagn, Korbinian and Syed Sha, Qutub},
  booktitle={Deep Neural Networks and Data for Automated Driving: Robustness, Uncertainty Quantification, and Insights Towards Safety},
  pages={359--381},
  year={2022},
  publisher={Springer International Publishing Cham}
}

@incollection{hagn2022optimized,
  title={Optimized data synthesis for DNN training and validation by sensor artifact simulation},
  author={Hagn, Korbinian and Grau, Oliver},
  booktitle={Deep Neural Networks and Data for Automated Driving: Robustness, Uncertainty Quantification, and Insights Towards Safety},
  pages={127--147},
  year={2022},
  publisher={Springer International Publishing Cham}
}

@inproceedings{syed2020dnn,
  title={DNN analysis through synthetic data variation},
  author={Syed Sha, Qutub and Grau, Oliver and Hagn, Korbinian},
  booktitle={Proceedings of the 4th ACM Computer Science in Cars Symposium},
  pages={1--10},
  year={2020}
}
```
